Five Ways Your Site Is Hurting Your SEO

When it comes to SEO, everyone wants to be at the top. After all, higher rankings mean more traffic, exposure, and conversions, right? Search engine results pages are constantly evolving, but it’s still true that having real estate at the top of the results can greatly benefit your business.

In most cases, your site needs to provide an exceptional experience for users in order to land in one of the engines’ top spots. An extraordinary user experience, in terms of both content and site functionality, generally makes for a better experience for search engines, too, and that can eventually lead to higher rankings.

You might not be aware of it, but your site could be damaging your SEO. If you want to land at the top of the heap, you’ll need to be on the lookout for issues. Here are five of the most common ways your site could be hindering your search engine optimization efforts:

1. It’s Not Mobile Friendly

We’ve talked a lot about this on the Papercut blog before, but it’s becoming more important than ever. Just last week, Google announced that over half of its searches worldwide are conducted on mobile devices. If your site isn’t mobile friendly, it’s behind the times. Mobile visitors who land on a non-mobile site are likely to be unhappy with the experience and leave. That isn’t good, especially since search engines can pick up on this kind of behavior. Plus, since Google’s recent mobile update, mobile-friendly sites get a boost in mobile search results. So whether you choose a responsive design or a separate mobile site, make sure mobile users are delighted with your website.
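
If you’d like a quick first check of your own pages, responsive sites usually declare a viewport meta tag along the lines of `<meta name="viewport" content="width=device-width, initial-scale=1">`. The short Python sketch below uses only the standard library’s `html.parser` to look for that tag. Treat it as a rough heuristic, not a verdict: a page can include the tag and still be awkward to use on a phone, and the helper name `has_responsive_viewport` is our own hypothetical choice, not part of any tool.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records whether the page declares a width=device-width viewport meta tag."""

    def __init__(self):
        super().__init__()
        self.found = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # attrs is a list of (name, value) pairs; a value can be None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "viewport":
            # Ignore spacing and case, e.g. "width = Device-Width".
            content = attributes.get("content", "").replace(" ", "").lower()
            if "width=device-width" in content:
                self.found = True


def has_responsive_viewport(html):
    """Hypothetical helper: True if the HTML declares width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    return finder.found
```

Feed it the HTML of any page, for example `has_responsive_viewport(page_html)`. A False result is a prompt to look more closely, not proof that the page fails on mobile.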

2. It Has Slow Load Times

Google has said that page load time is a ranking factor in its algorithm, and it’s definitely something you should pay attention to. Occasional slowdowns can be caused by server problems, but if your pages are consistently slow, the culprit may be large images and videos or even a problem with your site’s code. Slow pages hurt your site’s user experience, and the effects can trickle down to your rankings. If you notice that your site is frequently slow to load, get in touch with your developers and find a fix. Google’s PageSpeed Insights tool can help developers pinpoint and correct issues on both the mobile and desktop versions of a site.
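
Large images and videos are one of the easiest culprits to check for. The Python sketch below walks a local folder of site assets (say, a copy of your uploads directory; that setup is an assumption) and lists media files over a size budget, largest first. The 200 KB budget and the extension list are arbitrary example values, not Google guidance, and file size is only one piece of load time; PageSpeed Insights measures the real thing.

```python
from pathlib import Path

# Hypothetical values: adjust the extensions and budget to suit your site.
MEDIA_SUFFIXES = {".jpg", ".jpeg", ".png", ".gif", ".webp", ".mp4", ".mov", ".webm"}
DEFAULT_BUDGET_BYTES = 200 * 1024  # 200 KB per file, an arbitrary example budget


def oversized_media(root, budget_bytes=DEFAULT_BUDGET_BYTES):
    """Return (path, size_in_bytes) pairs for media files over the budget, biggest first."""
    results = []
    for path in Path(root).rglob("*"):  # recurse through every subfolder
        if path.is_file() and path.suffix.lower() in MEDIA_SUFFIXES:
            size = path.stat().st_size
            if size > budget_bytes:
                results.append((str(path), size))
    results.sort(key=lambda item: item[1], reverse=True)
    return results
```

For example, `for path, size in oversized_media("path/to/uploads"): print(size // 1024, "KB", path)` prints the heaviest files first, which is usually where compression or resizing pays off most.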

3. It Has a Lot of Duplicate Content

If you know anything about SEO, chances are good you’ve heard about duplicate content before. While there’s not really an algorithmic penalty for it per se, that doesn’t mean it’s not a problem. Duplicate content can confuse your users when they see the same chunks of content in multiple places across your site. That looks kind of odd, no? It confuses search engines, too. When the same content appears in two places on your site, or on both your site and someone else’s, the search engines can’t always tell which version should be ranked and who should get the “credit” for it. At its extreme, duplicate content is downright plagiarism or “scraping,” and thankfully, the search engines have gotten pretty good at catching this.

There are some subtler things that can cause duplicate content on your site, though. Sometimes a content management system will inadvertently create more than one URL for a single piece of content and… Boom. You’ve got duplicate content on your site. Other times, your site may be accessible at both a non-www and a www URL. This also counts as duplicate content, because the engines can treat the two versions as separate sites. If you’re running into this issue, your developer can help you correct it with 301 redirects or another form of canonicalization, such as a rel="canonical" tag that points to the preferred URL.
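
The www versus non-www fix usually lives in your web server or CMS configuration, but the logic is simple enough to sketch. The Python function below, written under the assumption that www.example.com is the preferred hostname and example.com is the alias, maps a requested URL to the 301 redirect it should receive, keeping the path and query string so each page points to its own twin. The hostnames and the `redirect_for` name are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical configuration: the hostname you want indexed, and the
# alternate hostnames that should 301-redirect to it.
CANONICAL_HOST = "www.example.com"
ALIAS_HOSTS = {"example.com"}


def redirect_for(url):
    """Return (301, canonical_url) if url uses an alias host, else None."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # .hostname is already lowercased
    if host not in ALIAS_HOSTS:
        return None  # already on the canonical host (or not our site)
    # Keep the scheme, path, and query so every page maps to its own www twin.
    target = urlunsplit((parts.scheme, CANONICAL_HOST, parts.path or "/", parts.query, ""))
    return 301, target
```

A real setup would express the same rule in server configuration (an Apache or nginx rewrite, for instance) rather than in Python; the point is that every non-www URL should answer with a permanent redirect to exactly one www URL.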

4. It Has Too Many Low-Quality Pages

If your site has a significant number of low-quality pages with thin content, it’s suffering from what Rand Fishkin at Moz calls a “cruft” problem. These pages don’t serve your users because there’s no real content there to help them. Again, visitors will likely land there and leave immediately, causing you additional user experience problems. It’s also worth noting that a large number of these pages can set you up for problems with Google’s Panda update, which was designed to demote sites with thin or low-quality content. Beyond that, low-quality pages waste search engines’ resources and crawl budget. Crawlers will spend time on pages that offer no benefit (sometimes at the expense of your valuable pages), and if search engines see a large number of bad pages on your site, they may dock you for it.
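
To find thin pages before a search engine does, you can start with a crude word count. The Python sketch below counts words in a page’s text (skipping script and style blocks) and flags pages under a cutoff. The 250-word threshold is an arbitrary assumption, not a number from Google or Moz, and word count is only a proxy for quality: a short page can be genuinely useful, and a long one can still be cruft.

```python
from html.parser import HTMLParser

SKIPPED_TAGS = {"script", "style"}


class VisibleWordCounter(HTMLParser):
    """Counts words in text nodes, ignoring <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.words = 0

    def handle_starttag(self, tag, attrs):
        if tag in SKIPPED_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIPPED_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.skip_depth == 0:
            self.words += len(data.split())


def word_count(html):
    counter = VisibleWordCounter()
    counter.feed(html)
    counter.close()  # flush any buffered text
    return counter.words


def thin_pages(pages, min_words=250):
    """Return URLs whose word count falls below min_words (a hypothetical cutoff)."""
    return [url for url, html in pages.items() if word_count(html) < min_words]
```

Here `pages` is a hypothetical dict mapping each URL to its HTML, which you might build from a crawl export. Navigation and footer text count toward the total, so sites with heavy templates may want a higher cutoff.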

5. It’s Blocking Crawlers

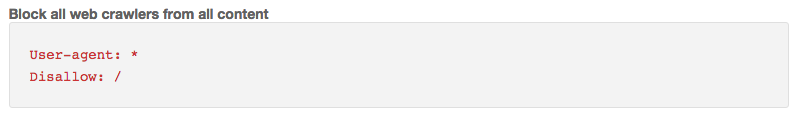

This is the biggie. It’s probably the absolute worst thing that can happen to your site in terms of SEO and, unfortunately, it happens more often than you think. Sites are particularly vulnerable to this problem during the development phase. Websites have what are known as robots.txt files, which allow developers to send directives to search engine crawlers, telling them what content they may crawl and what they should avoid. Ideally, development sites stay out of the search results, so to prevent crawling, some developers add a disallow directive (such as Disallow: /) to the robots.txt file. The problem is, sometimes they forget to remove it when the site goes live, and disaster ensues. Having your site verified in Google’s Search Console (formerly Webmaster Tools) will help you spot these problems. You can also check your site’s robots.txt file yourself by going to www.yoururl.com/robots.txt. If you see something like the image above, you’ve got trouble. Get with your web company immediately and get it fixed!

Image credit: Moz.com
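
If you’d rather not eyeball the file, Python’s standard library ships a robots.txt parser, `urllib.robotparser`, that can tell you whether a given crawler may fetch a URL. The sketch below checks whether a robots.txt file blocks Googlebot from the homepage; the `blocks_homepage` function and the two sample files are illustrative assumptions, not anything from Google.

```python
from urllib.robotparser import RobotFileParser


def blocks_homepage(robots_txt, site_url, user_agent="Googlebot"):
    """True if these robots.txt rules stop user_agent from fetching the site's root URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, site_url.rstrip("/") + "/")


# The classic leftover from a development site: every crawler blocked from everything.
DEV_ROBOTS = """User-agent: *
Disallow: /
"""

# A typical live-site file: only a private area is off limits.
LIVE_ROBOTS = """User-agent: *
Disallow: /admin/
"""

print(blocks_homepage(DEV_ROBOTS, "https://www.example.com"))   # True
print(blocks_homepage(LIVE_ROBOTS, "https://www.example.com"))  # False
```

To check a live site, you could call `parser.set_url("https://www.yoururl.com/robots.txt")` and `parser.read()` instead of parsing a string. Python’s parser applies rules in file order, which may not match how Google resolves overlapping Allow and Disallow lines, so treat Search Console as the final word.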

Keep an Eye Out

So, now you know five of the most common problems that can plague your site’s SEO. Remember that many of these issues can crop up at any point in your site’s lifetime, so it pays to stay vigilant and check on your site frequently. Keep improving your site’s experience and making your users happy, and you’ll be a lot more likely to reap the benefits of higher organic rankings.